4 November 2025

Why “Modern” Network Defense Systems Resemble Middle Ages Fortifications

By Adrian Perrig (ETH Zürich) and Fritz Steinmann (SIX)


In an era increasingly defined by rapid technological advancement and sophisticated digital threats, the current state of “modern” Internet defense systems presents a puzzling paradox. Upon closer inspection, one is struck by the astonishing degree to which these systems still rely on manual and human-intensive processes. This reliance seems fundamentally at odds with the increasingly automated nature of cyber warfare.

We are, after all, in the year 2025. This is a time when malicious actors and advanced persistent threat (APT) groups are no longer limited to rudimentary hacking techniques. Instead, they increasingly leverage sophisticated AI approaches, machine learning algorithms, and automation to orchestrate highly complex, adaptive attacks. These AI-driven offensive strategies allow attackers to rapidly identify vulnerabilities, automatically craft exploit code, and dynamically adjust their approach in real time in response to defensive countermeasures.

Given this context, the continued resemblance of our defense systems to “middle ages” weaponry is not just a rhetorical observation but a critical vulnerability. The disparity between AI-powered offensive capabilities and the often reactive, human-dependent nature of our defenses creates an ever-widening gap. This gap allows attackers to keep the initiative and carry out network-based attacks before human defenders can fully comprehend the scope or nature of an ongoing attack. These new challenges require innovative countermeasures, such as deploying fundamentally new network technologies that advance defense systems beyond their present capabilities.

The Primitive State of “Modern” Network Defense

The prevailing “modern” network packet filtering defense mechanisms still appear quite primitive, because they cannot guarantee that a legitimate client will be able to pass through and reach a service while an attacker is active. Critical infrastructures rely on such guarantees and therefore resort to private networks that exclude attackers by design. However, attackers may still be able to infiltrate such closed networks, and closed networks typically increase cost and reduce flexibility.

We label today’s industry-standard packet filtering systems “middle age” defenses because they lack guarantees and depend on manual processes, shortcomings that become evident in their false positives and false negatives. In security terminology, a “positive” stands for an attack packet, a terminology similar to that of medical testing (“a positive test result is negative for the patient, and a negative test result is positive”). A false positive means that the packet filter falsely labeled a benign packet as a “positive”, that is, a legitimate packet is believed to be an attack packet. Conversely, a false negative means that an attack packet was not recognized and is believed to be benign.
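To make the four outcomes concrete, here is a minimal Python sketch that combines a packet’s ground truth with the filter’s verdict to produce one of the four labels. The names `Outcome` and `classify_outcome` are hypothetical and serve only as an illustration.

```python
from enum import Enum


class Outcome(Enum):
    TRUE_POSITIVE = "true positive"    # attack packet, correctly flagged
    FALSE_POSITIVE = "false positive"  # benign packet, wrongly flagged as an attack
    TRUE_NEGATIVE = "true negative"    # benign packet, correctly let through
    FALSE_NEGATIVE = "false negative"  # attack packet, wrongly let through as benign


def classify_outcome(is_attack: bool, flagged_as_attack: bool) -> Outcome:
    """Combine ground truth with the filter's verdict.

    "Positive"/"negative" is the filter's verdict (attack or benign);
    "true"/"false" says whether that verdict matches reality.
    """
    if flagged_as_attack:
        return Outcome.TRUE_POSITIVE if is_attack else Outcome.FALSE_POSITIVE
    return Outcome.FALSE_NEGATIVE if is_attack else Outcome.TRUE_NEGATIVE


# A legitimate packet that the filter drops is a false positive.
print(classify_outcome(is_attack=False, flagged_as_attack=True).value)  # false positive
```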

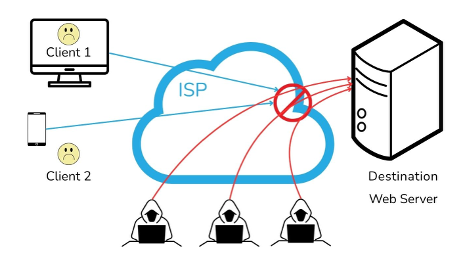

We illustrate these terms with an example. The first image depicts the case without an adversary: both clients can access the web server through their Internet Service Provider (ISP), so both users are satisfied.

In the presence of an adversary, however, the link from the ISP to the destination can be overloaded, preventing legitimate users from reaching the server. Consequently, the users are very much dissatisfied.

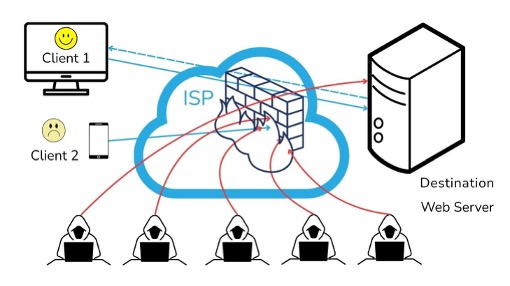

If the ISP has a filtering system in place, depicted as a “firewall” in the following diagram, all packets are inspected by a heuristic that decides whether each packet should be forwarded. One attacker’s traffic still reaches the web server, which represents a false negative. Most of the attackers’ traffic is filtered out, representing true positives. One happy client’s traffic is forwarded, representing a true negative, but another client’s traffic is blocked, an example of a false positive.
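The firewall scenario can be tallied the same way. The sketch below uses a made-up packet log loosely mirroring the diagram (the packet counts are illustrative assumptions, not measurements) and computes the false positive rate (the share of legitimate packets dropped) and the false negative rate (the share of attack packets forwarded).

```python
from collections import Counter

# Hypothetical packet log loosely mirroring the firewall diagram:
# (sender, is_attack, forwarded_by_firewall). The counts are illustrative only.
packet_log = (
    [("client_1", False, True),   # legitimate, forwarded
     ("client_2", False, False)]  # legitimate, blocked
    + [("attacker", True, False)] * 9  # attack packets filtered out
    + [("attacker", True, True)]       # one attack packet slips through
)


def tally(log):
    """Count true/false positives/negatives; dropping a packet means flagging it."""
    counts = Counter()
    for _sender, is_attack, forwarded in log:
        flagged = not forwarded
        if is_attack:
            counts["TP" if flagged else "FN"] += 1
        else:
            counts["FP" if flagged else "TN"] += 1
    return counts


counts = tally(packet_log)
false_positive_rate = counts["FP"] / (counts["FP"] + counts["TN"])  # legit packets dropped
false_negative_rate = counts["FN"] / (counts["FN"] + counts["TP"])  # attack packets passed
print(dict(counts), false_positive_rate, false_negative_rate)
```

With these invented numbers, half of the legitimate clients’ traffic is dropped (false positive rate 0.5), while one in ten attack packets still gets through (false negative rate 0.1).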

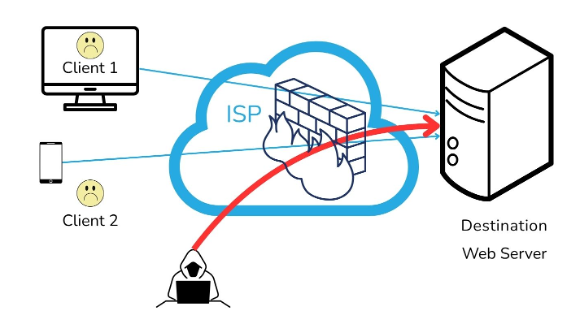

We next illustrate how false negatives can occur. Current network packet filtering defenses rely on heuristics to detect malicious packets, and a sophisticated attacker can deceive these heuristics to circumvent them. A heuristic is, in essence, a rule-based decision about whether a packet is benign or malicious, in contrast to a cryptographic approach, where the output of a cryptographic function determines whether a packet is benign or malicious. An example rule could require that a packet be sent from defined source addresses to a certain application at the destination, or that it show other characteristics in order to pass through the filter.

A sophisticated attacker can craft packets that the heuristic filter will let through, in particular since most packets are encrypted and the filtering system does not have the decryption key. Moreover, in typical deployments the filtering system does not observe the return traffic back to the attacker. The combination of these two shortcomings enables an attacker to spoof the IP address of a legitimate sender, so that the malicious packet bypasses the filtering system. The attacker can then follow up with encrypted traffic that appears legitimate, even though there is no open connection to the server. Such an attack packet that passes the filter is a false negative, and “middle age” heuristics-based defense systems cannot detect such mimicry.
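The following minimal sketch illustrates the spoofing problem with a hypothetical header-based rule: accept packets from a trusted client prefix to port 443. Because the filter sees only header fields, a packet with a forged source address and an opaque encrypted payload is indistinguishable from a legitimate one. The rule, the `Packet` type, and the addresses (taken from the 198.51.100.0/24 documentation range) are assumptions for illustration.

```python
import ipaddress
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str      # claimed source address (not verified by the network)
    dst_port: int
    payload: bytes   # encrypted, hence opaque to the filter


# Hypothetical heuristic rule: accept traffic from a trusted client prefix to HTTPS.
TRUSTED_PREFIX = ipaddress.ip_network("198.51.100.0/24")
ALLOWED_PORT = 443


def heuristic_allows(pkt: Packet) -> bool:
    # Only header fields are checked: the filter cannot decrypt the payload
    # and has no way to tell whether the claimed source really sent the packet.
    return (ipaddress.ip_address(pkt.src_ip) in TRUSTED_PREFIX
            and pkt.dst_port == ALLOWED_PORT)


legit = Packet("198.51.100.7", 443, os.urandom(64))
# The attacker forges the legitimate client's source address; random bytes
# stand in for ciphertext the filter cannot inspect.
spoofed = Packet("198.51.100.7", 443, os.urandom(64))
print(heuristic_allows(legit), heuristic_allows(spoofed))  # True True
```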

The following image depicts this setting, where the sophisticated attacker’s large traffic volume passes the firewall as a false negative, overwhelming the server such that it cannot respond to the legitimate users’ requests.

Similarly, some legitimate packets may be falsely classified by heuristic rules as attack packets, which is referred to as a false positive. As we illustrate in the section on spam filtering below, even after decades of heuristic rule tuning, such misclassifications continue to occur.

Updating the heuristic rules used by packet filters often involves a manual process by the vendor, or the introduction of manual exceptions that are hard to manage over time. The challenge is that a newly introduced rule to block an attack may also block legitimate traffic. Further, updates are not applied in real time, leaving a window of opportunity for the attacker to continue the exploit or find other victims with similar deficiencies.

The cumbersome process of updating rules in many modern network defense systems often mirrors the slow, manual methods of the middle ages. Vendors typically manage these updates, which involves a painstaking, hands-on approach. The inherent difficulty lies in the delicate balance between bolstering security and maintaining operational efficiency. A new rule, meticulously crafted to thwart an emerging cyberattack, carries the significant risk of a false positive – inadvertently disrupting legitimate network traffic. This collateral damage can lead to a range of issues, from minor inconveniences to critical system outages, ultimately undermining the very purpose of the defense system. The challenge is not merely technical but also operational, requiring constant vigilance by human operators and a precise understanding of network behavior to detect false positives that can cripple essential business functions.
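The collateral-damage problem can be sketched with a hypothetical blocklist rule. Suppose an operator reacts to an attack by blocking the source prefix observed during the incident: any legitimate client in the same prefix is blocked as well (a false positive), while an attacker who spoofs addresses can simply switch to another prefix. The addresses (from documentation ranges) and names are illustrative assumptions.

```python
import ipaddress

# Hypothetical blocklist maintained by an operator; any matching source is dropped.
blocklist = []


def allowed(src_ip: str) -> bool:
    addr = ipaddress.ip_address(src_ip)
    return not any(addr in net for net in blocklist)


legit_client = "203.0.113.20"   # legitimate user
attack_source = "203.0.113.99"  # source observed during an incident

# Reacting to the incident, the operator blocks the whole /24 ...
blocklist.append(ipaddress.ip_network("203.0.113.0/24"))

# ... which stops this attack source, but also the legitimate client in the same prefix.
print(allowed(attack_source), allowed(legit_client))  # False False

# Meanwhile, an attacker that spoofs an address from another prefix is unaffected.
print(allowed("192.0.2.44"))  # True
```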

Comparison to Spam Filtering

Consider the persistent challenge of spam filtering, a battle waged for over two decades. Despite 25 years of relentless innovation and the deployment of increasingly sophisticated technologies, the deluge of spam continues to inundate inboxes, often surpassing the volume of legitimate correspondence. This ongoing struggle highlights a fundamental flaw in our “modern” network defense systems.

Even in 2025, with all the advancements in AI and machine learning, we are still plagued by false positives and false negatives. These aren’t minor inconveniences; they represent significant disruptions and, in some cases, critical failures. Take, for example, the well-known issue of legitimate email being falsely marked as spam. It is not uncommon for ‘state-of-the-art’ spam filters to suddenly miscategorize emails from a regular contact as spam. This can happen with long-established contacts and might even affect replies to emails one has sent previously. The problem occurs even when the email provider employs state-of-the-art email authentication.

This raises a crucial question: are we truly in 2025, a year touted for its technological prowess, when even the most advanced systems are prone to such egregious and impactful errors? Such misclassifications, far from being isolated anomalies, underscore a deeper, more systemic issue within our cybersecurity infrastructure. They suggest that while we build increasingly complex defenses, these defenses often resemble middle-age weapons in their effectiveness against a constantly evolving threat landscape. The current paradigm of network defense, much like the Sisyphean task of spam filtering, seems to be a continuous effort to plug holes in a leaky dam, rather than a fundamental redesign that makes the system resilient at its core and provides guarantees for legitimate and critical operations. The persistent reliance on reactive heuristic approaches, however advanced, leaves us perpetually playing catch-up against adversaries who constantly adapt and innovate.

Transitioning to Next-generation Secure Systems

Our “modern” network defense systems are inherently reactive, designed to identify and respond to known threats. This creates a significant lag behind the continually evolving tactics and techniques of sophisticated attackers. These adversaries, often highly skilled and well-resourced, can readily bypass such traditional defenses, exploiting new vulnerabilities or employing novel attack patterns that current systems are not programmed to detect.

This persistent disparity necessitates a heavy reliance on human intervention during active attack periods. Security teams find themselves engaged in a laborious, often manual, process of threat hunting, incident response, and damage control. These attack periods are not fleeting; in many documented cases, they extend for days or even weeks. Such prolonged engagements inevitably consume a substantial share of employees’ time and energy, as well as money, diverting them from proactive security measures, strategic initiatives, and core business functions. The financial and operational costs associated with this human-intensive defense model are substantial, encompassing not only direct labor expenses but also the opportunity cost of foregone productivity and the potential for significant reputational damage.

A brief Internet search suggests that the annual cost to secure a mid-sized tech company (100-500 employees) with current state-of-the-art network security technology is around USD 500K, which in our experience is a conservative estimate. Given that there are over 10,000 mid-sized software and IT companies in the US, costs exceed USD 5B per year in that country alone. In return for this money, you get “maybe, best effort” security, which is akin to securing your front door with a bad lock that can be broken without extraordinary effort. Thus, a public network that can provide security guarantees would result in a tremendous reduction in cost and an increase in flexibility.
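The aggregate figure is a simple product of the two estimates above; the inputs are the article’s estimates, not measured data.

```python
# Inputs are the article's estimates, not measured data.
mid_sized_companies_us = 10_000          # lower bound on mid-sized software/IT companies
annual_cost_per_company_usd = 500_000    # conservative per-company security spend

total_usd = mid_sized_companies_us * annual_cost_per_company_usd
print(f"USD {total_usd / 1e9:.0f}B per year")  # USD 5B per year
```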

In stark contrast to the outdated approaches described above, SCION emerges as a next-generation network architecture designed from the ground up to offer the much-needed security guarantees. SCION achieves this through the pervasive application of cryptographic mechanisms that an attacker cannot overcome without access to the cryptographic keys. Such an approach ends the cat-and-mouse game between attacker and defender over false positives and false negatives. This cryptographic backbone transforms network security from a reactive, best-effort endeavor into a proactive, provable system, where the integrity and authenticity of communications are mathematically assured. Instead of relying on heuristics-based filters, this approach grants access through the distribution of cryptographic keys, and additional mechanisms can limit the damage in case a key is disclosed to an attacker.
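As a generic illustration of the contrast with heuristics (not SCION’s actual mechanism), the sketch below admits a packet only if it carries a valid message authentication code, here HMAC-SHA256 from Python’s standard library, computed with a key distributed to authorized senders. Copying or spoofing header fields does not help an attacker, because producing a valid tag requires the key. The key handling, message layout, and function names are assumptions.

```python
import hashlib
import hmac
import os

# Hypothetical per-sender key, distributed out of band only to authorized senders.
sender_key = os.urandom(32)


def compute_tag(key: bytes, header: bytes, payload: bytes) -> bytes:
    # Length-prefix the header so (header, payload) boundaries are unambiguous.
    msg = len(header).to_bytes(2, "big") + header + payload
    return hmac.new(key, msg, hashlib.sha256).digest()


def verify(key: bytes, header: bytes, payload: bytes, tag: bytes) -> bool:
    # A yes/no cryptographic check in constant time; no heuristic rules involved.
    return hmac.compare_digest(compute_tag(key, header, payload), tag)


header, payload = b"src=198.51.100.7;dport=443", b"request"
legit_tag = compute_tag(sender_key, header, payload)

# An attacker can copy the header (i.e., spoof the source) but, lacking the key,
# can only guess a tag, for example by using some other key.
forged_tag = compute_tag(os.urandom(32), header, payload)

print(verify(sender_key, header, payload, legit_tag))   # True
print(verify(sender_key, header, payload, forged_tag))  # False (except with negligible probability)
```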

Zero-trust architectures deployed on-premises or in a public cloud emphasize “always verify and always authenticate”, among other principles. SCION embodies these principles at the inter-domain level. Unlike traditional packet filtering, SCION enforces verification through mathematically rigorous cryptographic proofs embedded in every packet. Each network router can independently validate the packet’s authenticity and path correctness, eliminating reliance on implicit trust or approximations. In this sense, SCION translates the zero-trust mindset into the network fabric itself: only cryptographically verified traffic is elevated to “trusted”, while all else can be isolated.
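Per-hop validation can be sketched as a chain of hop fields, each authenticated by the AS that created it with a key only that AS knows, and each MAC also covering the previous hop’s MAC. This is a deliberately simplified model: real SCION hop fields also carry expiration times and use a different MAC construction and chaining scheme, and the interface numbers, MAC truncation length, and helper names below are hypothetical.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass

# Hypothetical per-AS secret keys; each AS knows only its own.
AS_KEYS = {name: os.urandom(16) for name in ("A", "B", "C")}


@dataclass(frozen=True)
class HopField:
    ingress: int  # interface where the packet enters the AS
    egress: int   # interface where the packet leaves the AS
    mac: bytes


def hop_mac(key: bytes, ingress: int, egress: int, prev_mac: bytes) -> bytes:
    # Covering the previous hop's MAC ties each hop field to its place in the path.
    msg = ingress.to_bytes(2, "big") + egress.to_bytes(2, "big") + prev_mac
    return hmac.new(key, msg, hashlib.sha256).digest()[:6]  # truncated for compactness


def build_path(spec):
    """Path construction: each AS authenticates its hop field with its own key."""
    path, prev_mac = [], b""
    for as_name, ingress, egress in spec:
        mac = hop_mac(AS_KEYS[as_name], ingress, egress, prev_mac)
        path.append(HopField(ingress, egress, mac))
        prev_mac = mac
    return path


def router_accepts(key: bytes, path: list, i: int) -> bool:
    """Forwarding: the router of hop i recomputes only its own hop field's MAC."""
    hf = path[i]
    prev_mac = path[i - 1].mac if i > 0 else b""
    return hmac.compare_digest(hop_mac(key, hf.ingress, hf.egress, prev_mac), hf.mac)


path = build_path([("A", 0, 1), ("B", 2, 3), ("C", 4, 0)])
print(all(router_accepts(AS_KEYS[n], path, i) for i, n in enumerate("ABC")))  # True

# An attacker tries to redirect traffic by rewriting B's egress interface.
tampered = list(path)
tampered[1] = HopField(path[1].ingress, 7, path[1].mac)
print(router_accepts(AS_KEYS["B"], tampered, 1))  # False (except with negligible probability)
```

No router needs another AS’s key or any global heuristic: each one checks only the hop field it issued, which is what allows validation to happen independently at every hop.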

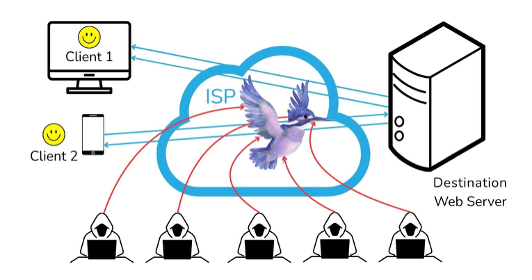

In addition, the commercial SCION network supports Hidden Paths: routes protected by cryptographic keys, where traffic cannot traverse a link without the proper key material and the path itself is invisible to unauthorized network users. Looking ahead, mechanisms such as Hummingbird (for in-network filtering) and LightningFilter (for end-domain protection) will further strengthen the separation between unverified background traffic and verified packets. The following figure shows how an ISP deploying Hummingbird can protect legitimate users’ traffic, and block attacker traffic (assuming that the attackers cannot obtain resource reservations). Even if the attackers can obtain resource reservations, the server can control how much bandwidth is allocated to the adversary on the last link, ensuring that other legitimate users cannot be squeezed out.
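The idea of protecting reserved traffic from a flood can be sketched as a per-time-slot scheduler on the last link. This is a hypothetical simplification, not the Hummingbird protocol itself: packets carrying a reservation tag that verifies against the router’s key are served first, each reservation is capped at its reserved amount, and all other traffic shares the leftover capacity on a best-effort basis. The `Reservation` type, the tag format, and the slot-based accounting are assumptions.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass

# Hypothetical key of the on-path router (or its reservation service).
ROUTER_KEY = os.urandom(16)


@dataclass(frozen=True)
class Reservation:
    res_id: int
    bytes_per_slot: int  # reserved bandwidth, expressed per forwarding time slot


def reservation_tag(res: Reservation) -> bytes:
    # Only the holder of ROUTER_KEY can issue tags that verify.
    msg = res.res_id.to_bytes(4, "big") + res.bytes_per_slot.to_bytes(4, "big")
    return hmac.new(ROUTER_KEY, msg, hashlib.sha256).digest()


def forward_slot(packets, link_capacity):
    """Schedule one time slot on the protected link.

    packets: list of (size, reservation_or_None, tag_or_None), in arrival order.
    Returns forwarded (size, res_id_or_None) pairs.
    """
    remaining = link_capacity
    used = {}  # bytes consumed per reservation in this slot
    forwarded, best_effort = [], []
    for size, res, tag in packets:
        verified = (res is not None and tag is not None
                    and hmac.compare_digest(reservation_tag(res), tag))
        within_budget = verified and used.get(res.res_id, 0) + size <= res.bytes_per_slot
        if within_budget and size <= remaining:
            used[res.res_id] = used.get(res.res_id, 0) + size
            remaining -= size
            forwarded.append((size, res.res_id))
        else:
            best_effort.append(size)  # unverified, or beyond its reservation
    for size in best_effort:  # leftover capacity, first come first served
        if size <= remaining:
            remaining -= size
            forwarded.append((size, None))
    return forwarded


legit_res = Reservation(res_id=1, bytes_per_slot=3000)
attacker_res = Reservation(res_id=2, bytes_per_slot=1500)  # attacker holds a small reservation
flood = [(1500, None, None)] * 20  # unreserved flood
attacker_reserved = [(1500, attacker_res, reservation_tag(attacker_res))] * 5
legit = [(1500, legit_res, reservation_tag(legit_res))] * 2

out = forward_slot(flood + attacker_reserved + legit, link_capacity=6000)
print(out)
```

Because verified reservations are scheduled before best-effort traffic regardless of arrival order, both legitimate packets get through even though the flood arrives first, and the attacker’s reservation yields only its reserved share, with any excess demoted to best effort.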

Glossary

- Zero-trust architecture: A security model that assumes no user or device can be trusted by default, regardless of whether they are inside or outside the network. Every access request is verified and authenticated.

- Autonomous System (AS): A collection of connected Internet Protocol (IP) network devices under the control of a single entity. An AS can be an Internet Service Provider (ISP) such as Swisscom, or an end domain such as SIX or ETH Zurich.

- Inter-domain vs. intra-domain: Communication within a single AS is referred to as intra-domain, and (global) communication beyond a single AS is referred to as inter-domain.

- Border Gateway Protocol (BGP): An inter-domain routing protocol designed to exchange routing and reachability information among autonomous systems (AS) on the Internet. BGP makes routing decisions based on paths, network policies, or rule sets.

- IP address: A unique numerical label assigned to each device connected to a computer network that uses the Internet Protocol for communication. An IP address serves two main functions: host or network interface identification and location addressing.

- Port number: A number used to identify a specific application running on a network device. When data is sent over a network, it is directed to a specific IP address and port number to ensure it reaches the correct application on the destination device.

- BGP Hijacking: A malicious act where an attacker illegitimately takes control of a group of IP addresses belonging to another Autonomous System (AS). This allows the attacker to reroute traffic intended for the legitimate AS through their own network, leading to denial of service, traffic interception, or other attacks.

- Source IP address spoofing: The act of faking the source IP address of a network packet to hide the true origin of the packet or impersonate another system. This can be used in various attacks, such as denial-of-service attacks or bypassing security measures.
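To illustrate the BGP hijacking entry: routers forward along the longest (most specific) matching prefix, so an attacker who announces a more-specific sub-prefix of a victim’s address block can attract that traffic. The table below is a toy model; the prefixes come from a documentation range and the AS numbers from the documentation AS range, and both are hypothetical.

```python
import ipaddress

# Toy forwarding table: prefix -> origin AS. AS numbers are from the
# documentation range (64496-64511) and purely hypothetical.
routes = {ipaddress.ip_network("203.0.113.0/24"): "AS64500 (legitimate origin)"}


def origin_for(dst: str) -> str:
    """Pick the route with the longest matching prefix, as IP forwarding does."""
    addr = ipaddress.ip_address(dst)
    best = max((net for net in routes if addr in net), key=lambda net: net.prefixlen)
    return routes[best]


print(origin_for("203.0.113.10"))  # AS64500 (legitimate origin)

# The attacker announces a more-specific sub-prefix of the victim's block.
routes[ipaddress.ip_network("203.0.113.0/25")] = "AS64501 (attacker)"
print(origin_for("203.0.113.10"))   # AS64501 (attacker)
print(origin_for("203.0.113.200"))  # AS64500 (legitimate origin), outside the /25
```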